Internship at NCS (SAS)

During my third year at polytechnic, I interned at NCS as a Data Analyst Intern. There I gained valuable work experience and exposure, including working and communicating with co-workers. I also worked in a real-world production and development environment, which gave me more insight into the DevOps process. I learned and applied various data processing, engineering and staging methods, all in SAS, a language and software suite that was new to me.

"Smart Virtual Counsellor" done for SkillsFuture (Full Stack, Tensorflow, MongoDB)

For my Final Year Project, I worked with SkillsFuture to create a Smart Virtual Counsellor, a website where users can upload their resumes and be recommended relevant jobs. It also shows them which skills they might be lacking for different jobs and recommends courses to learn those skills. We did this by using OCR to read each resume, then sending the extracted text to an AI job recommender engine that uses the information in the user's resume to recommend jobs.


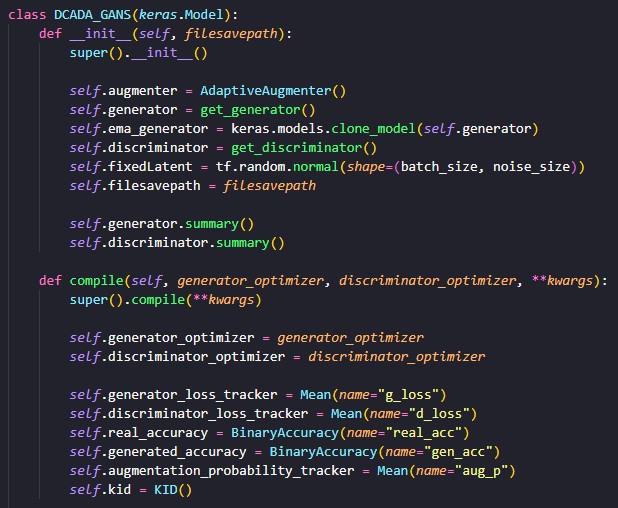

DEEP CONVOLUTIONAL ADAPTIVE DISCRIMINATOR AUGMENTATION GANs WITH CIFAR10 (Tensorflow/Keras)

This was my first experience building and training a Generative Adversarial Network (GAN), using the CIFAR10 dataset. I explored adaptive discriminator augmentation, a method used in state-of-the-art GAN models. The aim of this project was to generate 1000 new images using the CIFAR10 dataset.
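
The "adaptive" part of adaptive discriminator augmentation can be sketched as a small feedback rule: the probability of augmenting an image goes up when the discriminator looks overconfident on real images and down otherwise. This is a simplified illustration, not the project's code; the function name and the `target` and `step` values are assumptions chosen for the example.

```python
def update_augment_probability(p, real_logits, target=0.6, step=0.01):
    """Nudge the augmentation probability p based on discriminator confidence.

    real_logits: discriminator outputs on a batch of real images
    (positive means "classified as real"). target and step are
    illustrative values, not tuned settings.
    """
    # Overfitting indicator: mean sign of the outputs on real images.
    signs = [1.0 if x > 0 else -1.0 if x < 0 else 0.0 for x in real_logits]
    r_t = sum(signs) / len(signs)
    # Augment more when the discriminator is too confident, less otherwise.
    p += step if r_t > target else -step
    # Keep p a valid probability.
    return min(1.0, max(0.0, p))


p_confident = update_augment_probability(0.5, [2.0, 1.0, 3.0])
p_unsure = update_augment_probability(0.0, [-1.0, -2.0, 1.0])
```

In a training loop, a rule like this would run every few steps, and the resulting `p` would control how often augmentations are applied to the images the discriminator sees.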


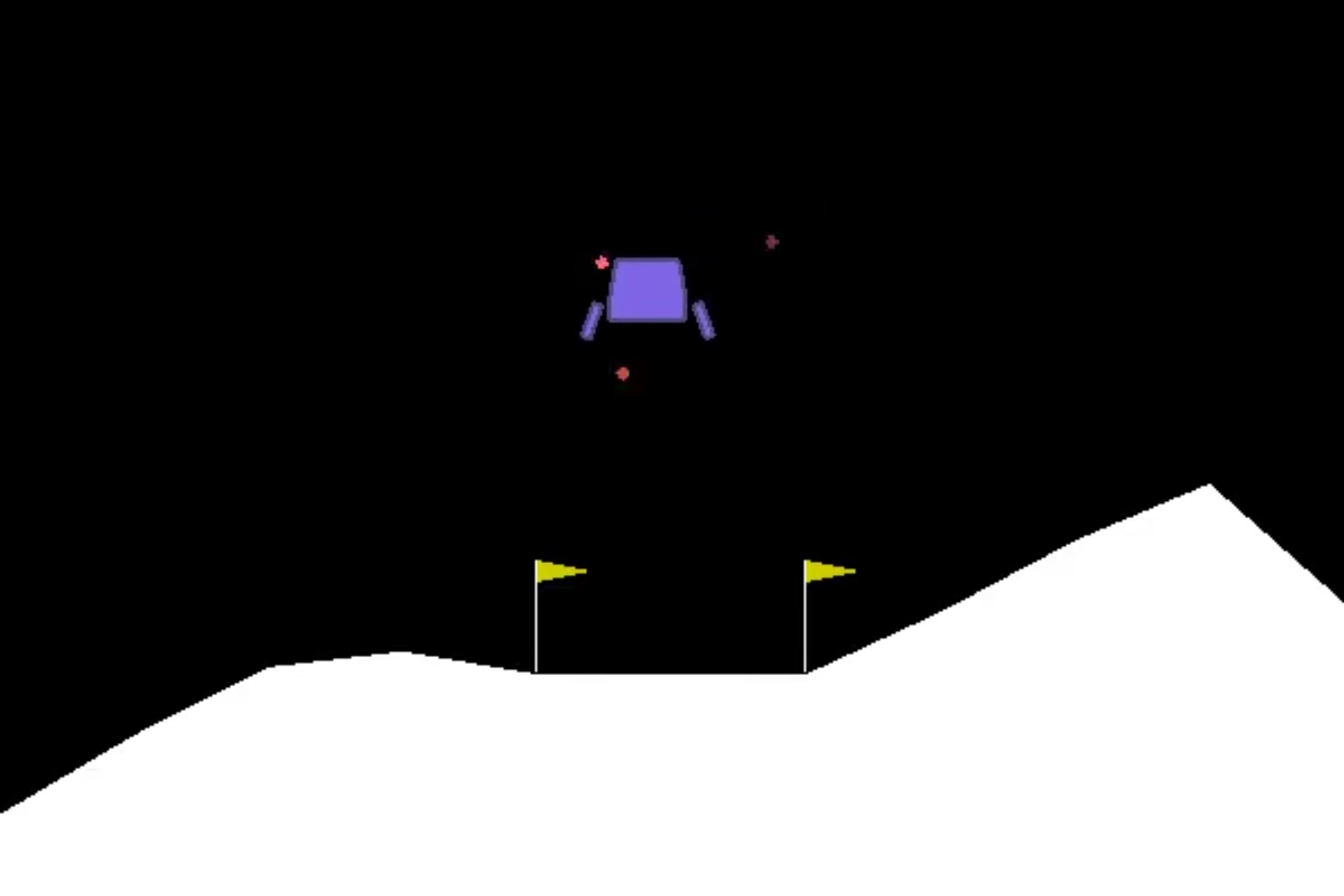

LANDING A LUNAR LANDER USING REINFORCEMENT LEARNING (Tensorflow/Keras)

This was my first attempt at building and training a reinforcement learning algorithm. The objective was to solve the LunarLander-v2 environment in OpenAI Gym. I approached it with a Double Deep Q-Network, and also tried a Deep Q-Network.
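
The difference between the two approaches comes down to how the training target is computed. The sketch below uses plain Python lists in place of network outputs; the function names and the sample Q-values are hypothetical and only illustrate the two formulas.

```python
def dqn_target(reward, next_q_target, done, gamma=0.99):
    """Standard DQN: the target network both picks and scores the next action."""
    if done:
        return reward
    return reward + gamma * max(next_q_target)


def double_dqn_target(reward, next_q_online, next_q_target, done, gamma=0.99):
    """Double DQN: the online network picks the action, the target network scores it."""
    if done:
        return reward
    best_action = max(range(len(next_q_online)), key=lambda a: next_q_online[a])
    return reward + gamma * next_q_target[best_action]


# Made-up Q-values for the four discrete LunarLander actions in the next state.
next_q_online = [1.0, 3.0, 2.0, 0.5]
next_q_target = [4.0, 2.5, 5.0, 1.0]

standard = dqn_target(1.0, next_q_target, done=False, gamma=0.5)
double = double_dqn_target(1.0, next_q_online, next_q_target, done=False, gamma=0.5)
```

Here the standard target uses the largest target-network value, while the Double DQN target uses the value of the action the online network prefers, which is the mechanism intended to reduce overestimated Q-values.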


ADVANCED DATA PREPROCESSING, ANALYTICS AND VISUALISATION ON A LARGE DATASET AND SETTING UP A MACHINE LEARNING PIPELINE (Python, scikit-learn, Tableau)

The aim of this project was to determine whether a taxi driver should be considered safe or dangerous based on driving telemetry. We worked with a dataset of more than 7 million rows and used advanced data preprocessing methods such as feature generation, feature aggregation, outlier treatment, and data balancing using SMOTE and undersampling. We then developed a machine learning pipeline to train a model that predicts whether a taxi driver is safe or dangerous based on their telemetry. We also used Tableau to visualise some of our findings about the relationships between various telemetry readings and the safe or dangerous classification.
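
The balancing step can be illustrated with a simplified sketch. It is not the project's code and not the imbalanced-learn implementation: `smote_like_oversample` only mimics the core SMOTE idea of interpolating between a minority sample and one of its nearest minority neighbours, and all names and sample points are hypothetical.

```python
import math
import random


def smote_like_oversample(minority, n_new, k=3, seed=0):
    """Create n_new synthetic minority points by interpolating between neighbours."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # k nearest minority neighbours of the chosen point, excluding itself.
        neighbours = sorted(
            (p for p in minority if p is not base),
            key=lambda p: math.dist(base, p),
        )[:k]
        neighbour = rng.choice(neighbours)
        lam = rng.random()  # position along the segment base -> neighbour
        synthetic.append(tuple(b + lam * (n - b) for b, n in zip(base, neighbour)))
    return synthetic


def random_undersample(majority, n_keep, seed=0):
    """Keep a random subset of the majority class."""
    return random.Random(seed).sample(majority, n_keep)


# Hypothetical 2-feature samples: few "dangerous" trips, many "safe" trips.
dangerous = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
safe = [(float(i), float(i % 7)) for i in range(100)]

new_dangerous = smote_like_oversample(dangerous, n_new=6)
kept_safe = random_undersample(safe, n_keep=10)
```

Combining the two, the minority class grows through synthetic points while the majority class shrinks, which moves the class ratio closer to balanced before training.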

SIGNAL, YOUR SIGN LANGUAGE INTERPRETER (Python, HTML, Flask, Tensorflow/Keras)

Signal is a website that lets users video call each other over a peer-to-peer connection. During the call, users can communicate in sign language, and the website tracks their hands and tries to predict which sign is being shown. It then displays the prediction on both users' screens, allowing people who do not know sign language to understand the signs.


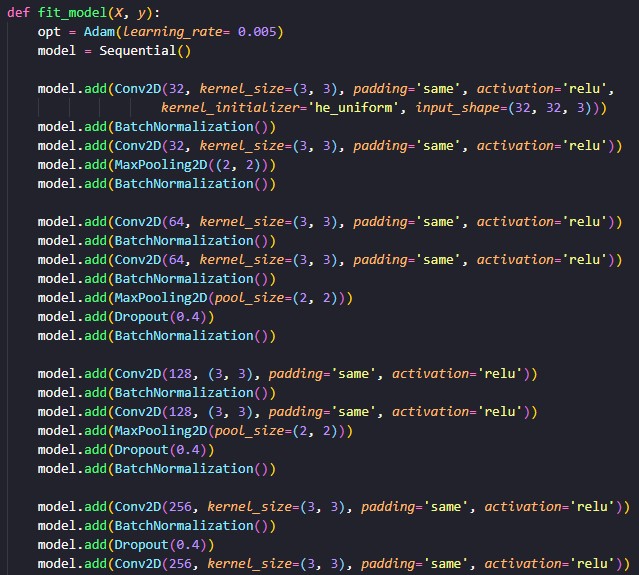

IMAGE CLASSIFICATION USING CONVOLUTIONAL NEURAL NETWORK ON THE CIFAR10 DATASET (Python, Tensorflow/Keras)

This was my first attempt at building and training a convolutional neural network (CNN). The aim of this project was to build a CNN that can classify the images in the CIFAR10 dataset. After a lot of research during this project, I gained a deeper understanding of how the CNN architecture works and of the different layers within it.

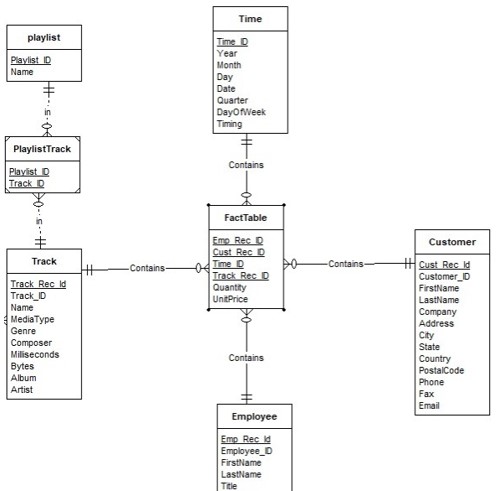

DATA WAREHOUSE AND PREPROCESSING FOR A MUSIC STORE (Python, SQL)

The aim of this project was to preprocess an Excel dataset in Python using tools such as Pandas and NumPy. The preprocessing included cleaning dirty data, removing invalid rows and standardising the format of the cells. I then created an ERD for the SQL database, built the database based on the ERD, and coded a pipeline to load the preprocessed data from Python into SQL. Afterwards, I queried the data using SQL and came up with some meaningful insights.
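
A clean-then-load pipeline of this kind can be sketched with the standard library alone. This sketch swaps Pandas for plain dictionaries and uses an in-memory SQLite database; the table names, columns and sample rows are all hypothetical and do not reflect the actual music store schema.

```python
import sqlite3

# Hypothetical raw rows, standing in for the Excel data.
raw_rows = [
    {"track": " Night Drive ", "artist": "alpha band", "price": "0.99"},
    {"track": "Slow Tide", "artist": "Beta Trio", "price": "1.29"},
    {"track": "", "artist": "Unknown", "price": "n/a"},  # invalid row
]


def clean(rows):
    """Drop invalid rows and standardise text and numeric formats."""
    cleaned = []
    for row in rows:
        track = row["track"].strip()
        try:
            price = float(row["price"])
        except ValueError:
            continue  # price is not a number
        if not track:
            continue  # missing track title
        cleaned.append((track, row["artist"].strip().title(), price))
    return cleaned


def load(rows):
    """Create two related tables and insert the cleaned rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE artist (artist_id INTEGER PRIMARY KEY, name TEXT UNIQUE)")
    conn.execute(
        "CREATE TABLE track (track_id INTEGER PRIMARY KEY, title TEXT, "
        "price REAL, artist_id INTEGER REFERENCES artist(artist_id))"
    )
    for title, artist, price in rows:
        conn.execute("INSERT OR IGNORE INTO artist (name) VALUES (?)", (artist,))
        artist_id = conn.execute(
            "SELECT artist_id FROM artist WHERE name = ?", (artist,)
        ).fetchone()[0]
        conn.execute(
            "INSERT INTO track (title, price, artist_id) VALUES (?, ?, ?)",
            (title, price, artist_id),
        )
    conn.commit()
    return conn


conn = load(clean(raw_rows))
result = conn.execute(
    "SELECT a.name, COUNT(*), ROUND(AVG(t.price), 2) "
    "FROM track t JOIN artist a USING (artist_id) "
    "GROUP BY a.name ORDER BY a.name"
).fetchall()
```

Splitting artists and tracks into separate tables linked by a key mirrors the kind of structure an ERD would describe, and the final query shows how the loaded data can be joined and aggregated.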

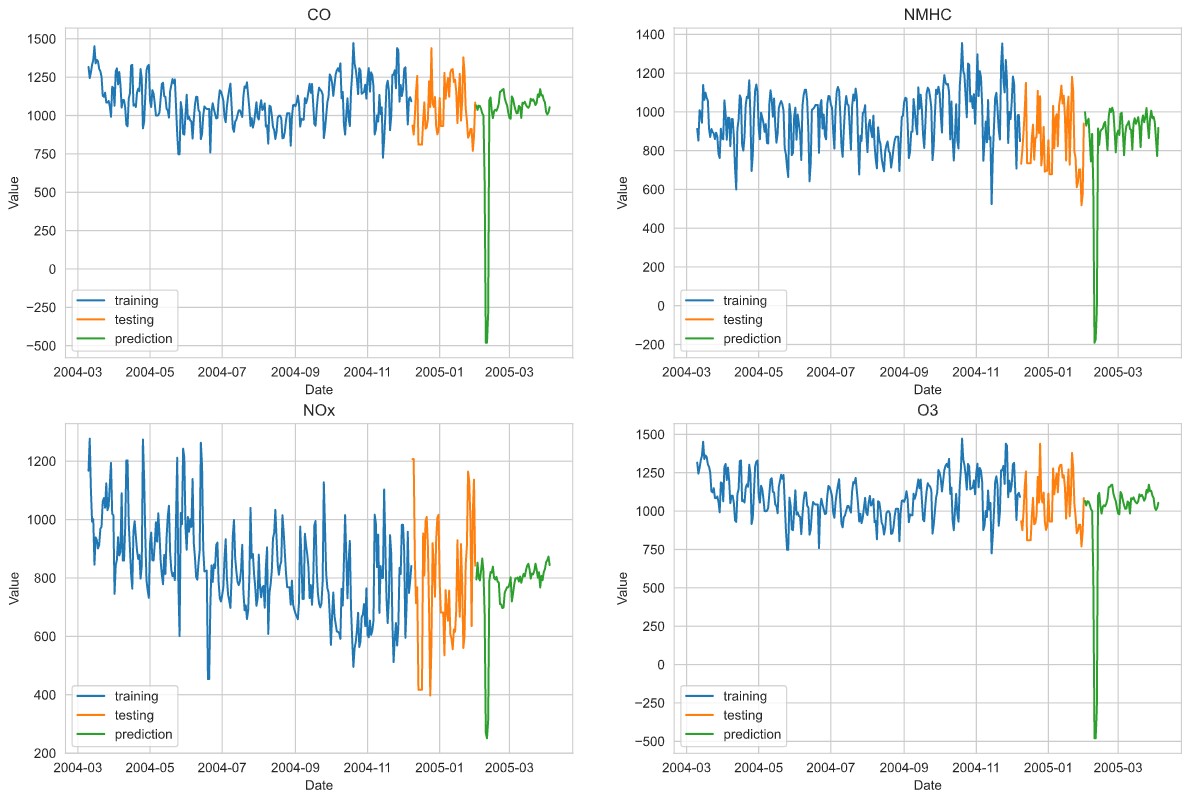

TIME SERIES USING ARIMA ON AIR POLLUTION DATASET (Python)

The aim of this project was to create a time series model that could predict the next 60 days of values for each gas in the air pollution dataset. I applied several data preparation steps, such as outlier removal, the Augmented Dickey-Fuller (ADF) stationarity test, and seasonal decomposition to check for trends and seasonality. I then ran a grid search to find the optimal parameters for the best-performing model, and used that final model to predict the values of each gas for the next 60 days.
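
The grid search step can be sketched as a loop over candidate ARIMA orders `(p, d, q)` that keeps the order with the lowest score. The sketch below is an illustration under assumptions, not the project's code: `grid_search_orders` is a hypothetical helper, and `toy_score` is a stand-in for fitting a real model and returning a criterion such as AIC.

```python
import itertools


def grid_search_orders(score, p_values, d_values, q_values):
    """Return the (p, d, q) order with the lowest score, and that score."""
    best_order, best_score = None, float("inf")
    for order in itertools.product(p_values, d_values, q_values):
        try:
            current = score(order)
        except Exception:
            continue  # some orders may fail to fit; skip them
        if current < best_score:
            best_order, best_score = order, current
    return best_order, best_score


def toy_score(order):
    """Stand-in scorer with a known minimum at (2, 1, 1); lower is better."""
    p, d, q = order
    return (p - 2) ** 2 + (d - 1) ** 2 + (q - 1) ** 2


best_order, best_score = grid_search_orders(toy_score, range(4), range(3), range(4))
```

With a library such as statsmodels, the scorer could instead fit an ARIMA model on one gas's series for the given order and return its AIC, and the search would be repeated per gas.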

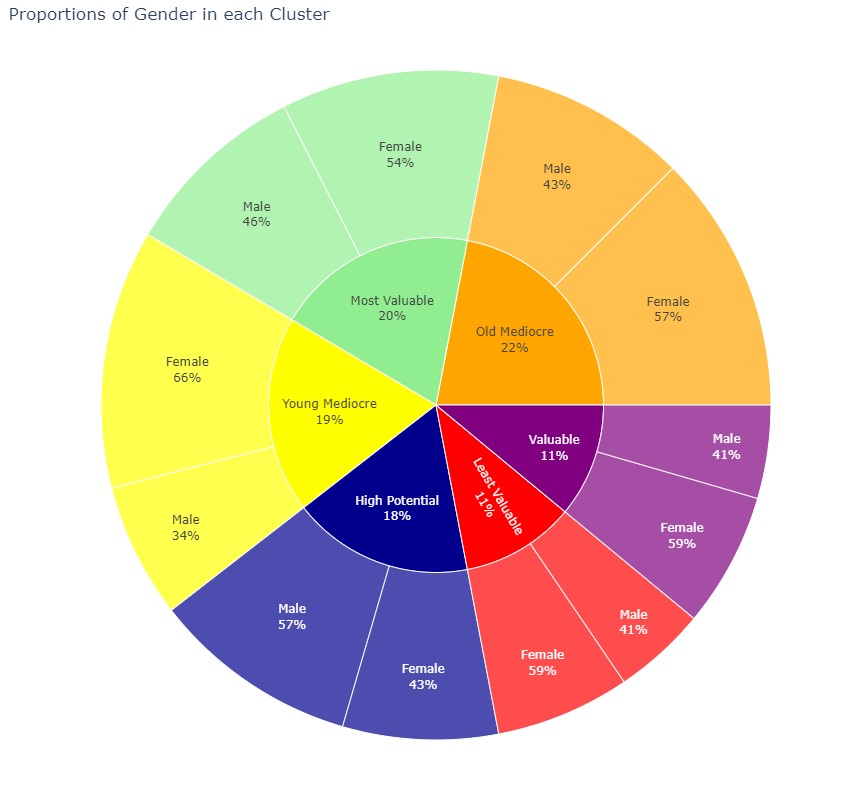

DATA CLUSTERING ON MALL CUSTOMERS DATASET (Python)

For this project, I performed data clustering on the Mall Customers dataset. I trained multiple clustering algorithms and found the optimal parameters for each of them. I then picked the model that produced the best and cleanest result. After choosing my final model, I conducted some visual analysis of how the data was clustered and identified 6 distinct demographics within the Mall Customers dataset. Finally, I analysed and explained the key features of each demographic and ranked them by how valuable they were as customers of the mall.

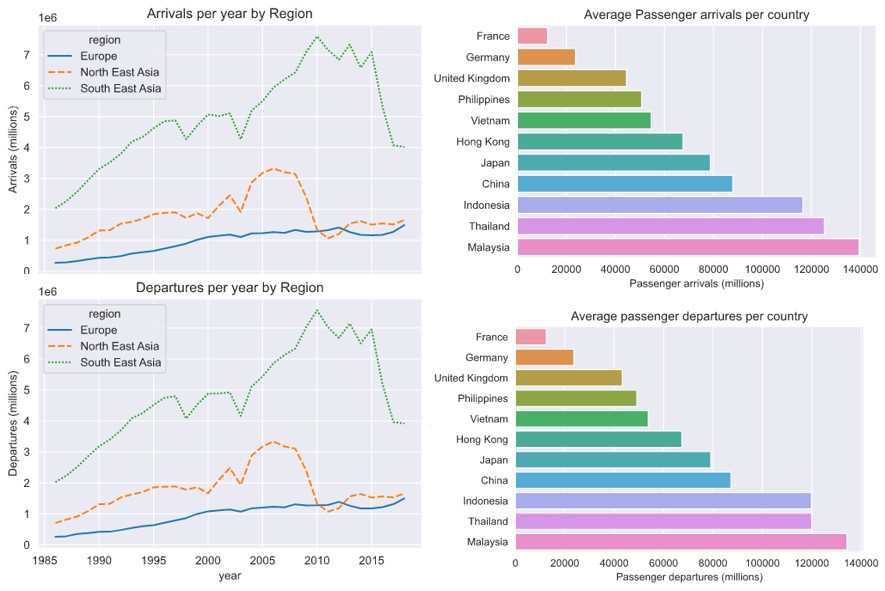

DATA PREPROCESSING, ANALYSIS AND VISUALISATION ON AIR PASSENGERS AND CARGO DATASET (Python, Tableau)

This was my first project involving data engineering, preprocessing, analysis and visualisation. First, I did data engineering by merging multiple datasets, such as passenger arrivals and passenger departures. I then did the usual data preprocessing by removing null values and outliers and running normality tests. Next, I visualised and analysed the data and came up with recommendations for my objectives.